Pointers for beginners to learn Apache Spark

I’ve tried to collect good links on Apache spark and it’s architecture here. I’ll be regularly updating this list as and when I come across new articles on Apache Spark.

Distributed Systems

Hadoop-Map Reduce paradigm

Official Apache Spark guide

Web resources, gitbooks and tutorials for Apache Spark

- **A nice brief gitbook on running spark from a USB stick in local mode: **How to light your ‘Spark on a stick’

- SparkSQL Getting Started

- Running Spark App In Standalone Cluster Mode

- Spark Recipes

- how to setup apache spark standalone cluster on multiple machine

- how spark internally executes a program

Papers published on Apache Spark

- Spark SQL: Relational Data Processing in Spark

- Resilient Distributed Datasets: A Fault-Tolerant Abstraction for In-Memory Cluster Computing

- Optimizing Shuffle Performance in Spark

Spark Architecture

- Spark Architecture

- Spark Misconceptions

- Spark Architecture: Shuffle

- RDD’s : Building block of Spark

- Spark DataFrames

Spark Topics about which one needs to be aware of, for building efficient data pipelines

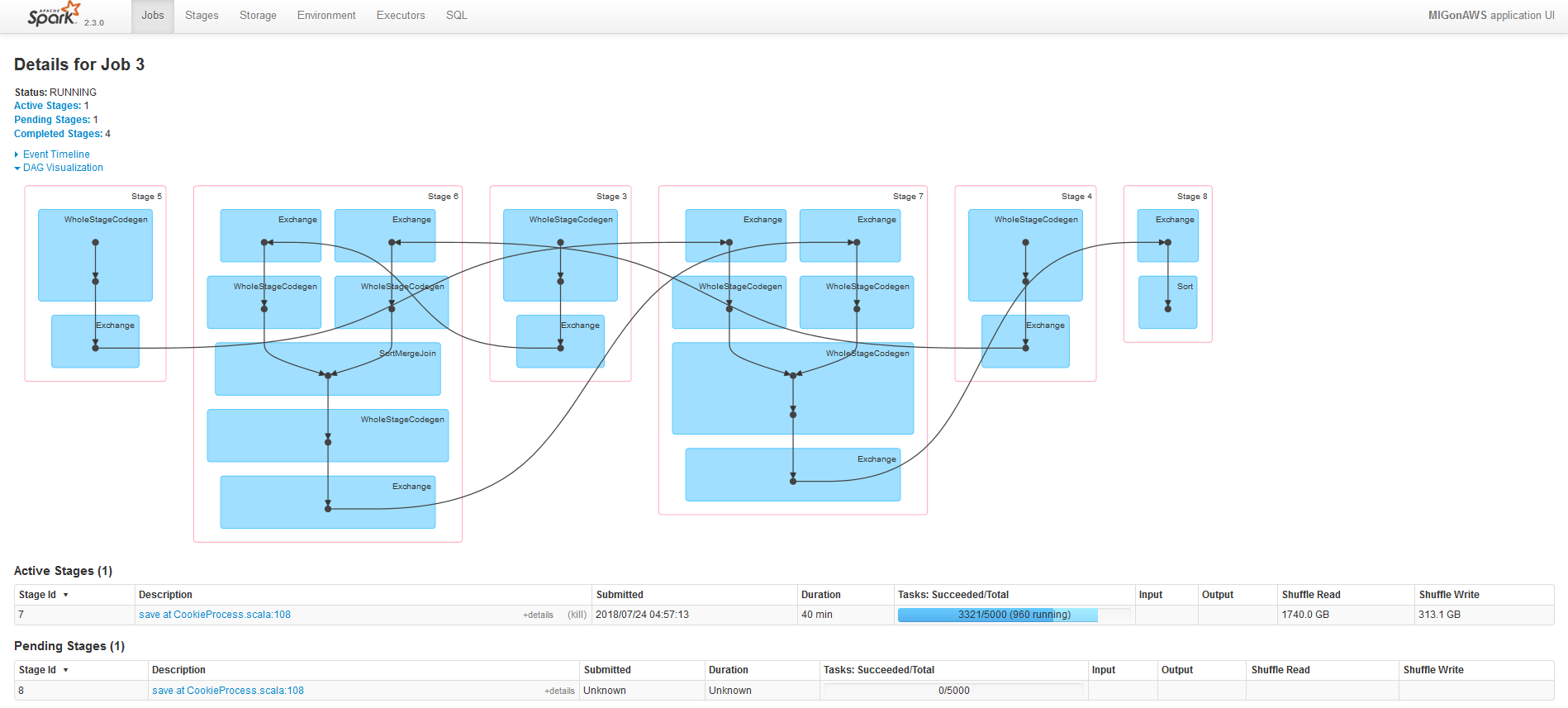

Spark Execution

Spark dynamic allocation

Spark Speculative tasks

Persisting and Checkpointing in Apache Spark

Spark Serialization

External shuffle service

Spark Partitioning

- An Intro to Apache Spark Partitioning: What You Need to Know

- Spark Under The Hood : Partition

- Partitioning in Spark

- Partitioning internals in Spark

Shuffling in Apche Spark

- All about Shuffling

- Another good article on shuffle by Cloudera : Working with Apache Spark: Or, How I Learned to Stop Worrying and Love the Shuffle

- In-depth explanation on Spark shuffle : Apache Spark Shuffles Explained In Depth

- You Won’t Believe How Spark Shuffling Will Probably Bite You (Also Windowing)

- A video on shuffle by Yandex on courseraShuffle. Where to send data?

- A brief coursera lecture on shuffling in Apache Spark : Shuffling: What it is and why it’s important

Tuning Apache Spark for performance

- Official Spark configuration page - version 2.3.xSpark Configuration

- How-to: Tune Your Apache Spark Jobs (Part 1)

- How-to: Tune Your Apache Spark Jobs (Part 2)

- Spark performance tuning from the trenches

- Tune your Spark (Part 2) jobs

- One operation and maintenance

Spark SQL

Introducing Window Functions in Spark SQL

Spark Operations

Commonly occuring errors and issues in Apache Spark

Most common Apache Spark mistakes and gotcha’s

Spark input splits works same way as Hadoop input splits, it uses same underlining hadoop InputFormat API’s. When it comes to the spark partitions, by default it will create one partition for each hdfs blocks, For example: if you have file with 1GB size and your hdfs block size is 128 MB then you will have total 8 HDFS blocks and spark will create 8 partitions by default . But incase if you want further split within partition then it would be done on line split.

On ingest, Spark relies on HDFS settings to determine the splits based on block size which maps 1:1 to RDD partition. However, Spark then gives you fine grain control over the number of partitions at run time. Spark provides transformation like repartition, coalesce, and repartitionAndSortWithinPartition give you direct control over the number of partitions being computed. When these transformations are used correctly, they can greatly improve the efficiency of the Spark job.

when reading compressed file formats from disk, Spark partitioning depends on whether the format is splittable. For instance, these formats are splittable: bzip2, snappy, LZO (if indexed), while gzip is not splittable.

Running Apache Spark on EMR

Apache Spark on EMR with S3 as the storage is a best combination for executing your ETL tasks in cloud these days. Running Spark on EMR takes away the hassle of setting up a spark/hadoop cluster and it’s administration. Also it comes with auto scaling feature.

So a regular spark execution on EMR looks like this:

Spawn a new EMR cluster considering the resources required for your job. Select the latest Spark version and other tools like Hive, Zeppelin, Ganglia. Pass the necessary configurations for spark and yarn which need to be loaded during the bootstrap process. Once the cluster is up, simply run your spark applications using Step execution, AWS lambda or spark-submit.

- Discusses about shuffle, task memory spill in EMRTuning My Apache Spark Data Processing Cluster on Amazon EMR

- Tuning Spark Jobs on EMR with YARN - Lessons Learnt

- Setting spark.speculation in Spark 2.1.0 while writing to s3

- Submitting User Applications with spark-submit

Apache Spark best practices

- Spark Best Practices

- Lessons From the Field: Applying Best Practices to Your Apache Spark Applications

- Spark Best Practices

- Spark best practices

- Best Practices for Spark Programming - Part I

- Apache Spark - Best Practices and Tuning